Building an AI-Powered RC Car with TensorFlow Lite on Raspberry Pi: A Step-by-Step Tutorial

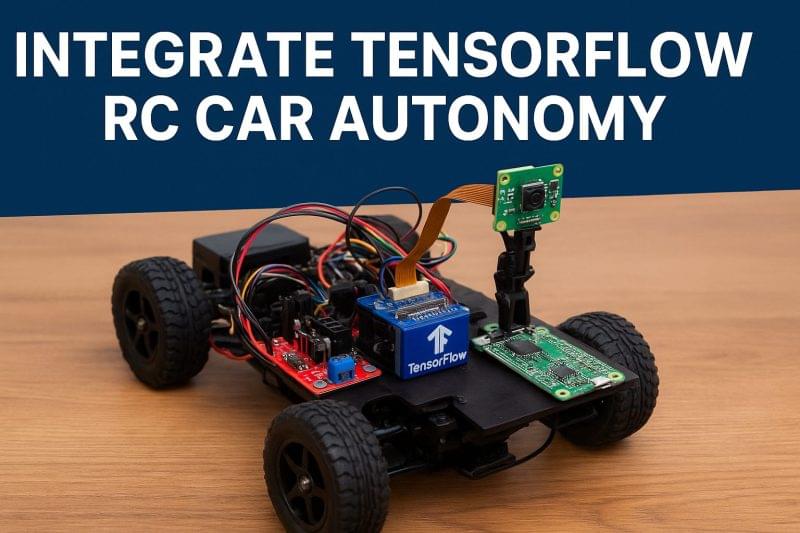

Building an AI-powered RC car is easier than you might think! We can use TensorFlow, a popular machine learning framework, to let a toy car “see” and drive by itself. In this tutorial, we’ll cover TensorFlow basics, set up a tiny computer (a Raspberry Pi Zero 2 W) with a camera, and wire up the motors. Then we’ll write simple Python code to load a TensorFlow Lite model that tells the car how to move. Finally, we’ll test the setup and discuss advanced improvements. The guide uses everyday language and clear steps, so whether you’re a beginner in AI, machine learning, or robotics, you can follow along and integrate TensorFlow Lite into your own RC car project.

TensorFlow is an open-source platform for machine learning. Google describes TensorFlow as “an end-to-end open source platform for machine learning”[1]. It has lots of tools and libraries for building “smart” apps. However, the full TensorFlow library is big and heavy for tiny devices. That’s where TensorFlow Lite comes in. TensorFlow Lite is a lightweight version made for mobile and embedded gadgets[2]. In other words, TensorFlow Lite is designed to run on small systems like smartphones or Raspberry Pis. This makes it ideal for edge computing applications in robotics and IoT devices, enhancing performance without requiring constant cloud connectivity.

Why do we use TensorFlow at all? With TensorFlow Lite, our RC car can do tasks like object detection, line following, or obstacle avoidance. For example, a model could recognize a stop sign. It could also see a colored line on the floor. Then, it decides to turn or stop the car. All the “thinking” happens by running the TensorFlow Lite model on the Pi. Think of it as the car’s brain. The camera sees the world. TensorFlow processes that image. The car’s code decides how to drive. This setup enables real-time decision-making, which is crucial for autonomous vehicles and DIY robotics projects.

TensorFlow Lite and Why We Use It

TensorFlow Lite is designed to be fast on small devices. The TensorFlow website says it is “a lightweight solution for mobile and embedded devices”[2]. This means it can run neural networks (trained machine learning models) efficiently with less computing power. For a tiny RC car, that’s crucial. We can’t use a super heavy program – it would be too slow or not fit in memory. TensorFlow Lite optimizes for low-latency inference, making it suitable for battery-powered devices like RC cars.

Here are some key points about TensorFlow and TensorFlow Lite:

- TensorFlow (TF): The full version, great for training and running big models, used by Google for Search, Gmail, Photos, etc[1]. But it needs a lot of CPU/GPU power.

- TensorFlow Lite (TFLite): A smaller, stripped-down version for inference (running already-trained models) on devices like phones, Pi, or Arduino. It can run on the CPU of a Raspberry Pi or even a microcontroller. (Models are often “quantized” to shrink their size and speed up inference.)

- Why TensorFlow Lite: It makes the car’s on-board computer “smarter” without needing a big server. We do the heavy training on a PC/cloud beforehand, then copy the small model file to the car’s Pi.
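To make “quantized” a bit more concrete, here is a minimal sketch of the affine 8-bit mapping that this kind of quantization is based on: a real value is stored as a small integer code, and recovered as scale × (code − zero point). The `SCALE` and `ZERO_POINT` values are made up for illustration; a real model stores its own values for each tensor.

```python
def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a real value to an unsigned 8-bit code (affine quantization)."""
    q = round(x / scale) + zero_point
    return max(0, min(255, q))  # clamp to the uint8 range


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Recover an approximate real value from its 8-bit code."""
    return scale * (q - zero_point)


# Illustrative parameters (assumed, not taken from any particular model):
# they map roughly the range [-1.0, 1.0] onto the codes 0..255.
SCALE = 2.0 / 255
ZERO_POINT = 128

weight = 0.5
code = quantize(weight, SCALE, ZERO_POINT)      # stored in 1 byte
approx = dequantize(code, SCALE, ZERO_POINT)    # close to 0.5, not exact
```

Each value now takes one byte instead of the four bytes of a 32-bit float, at the cost of a small rounding error (at most half of `SCALE` for values inside the range).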

| Feature | TensorFlow | TensorFlow Lite |
|---|---|---|
| Purpose | Full machine learning platform for training and inference | Lightweight inference on edge devices |
| Size | Large, resource-intensive | Small, optimized for low power |
| Use Cases | Cloud servers, desktops for model development | Mobile apps, embedded systems like RC cars |
| Performance | Requires high CPU/GPU | Efficient on limited hardware |
| Examples | Google Search, Photos | Object detection in robotics |

In a tutorial I read, a TensorFlow Lite object detector (SSD MobileNet) ran at about 1 frame per second on a Pi 3B+ and reached 5 fps on a Pi 4[3]. That’s not blazingly fast, but it is good enough for simple tasks. (An Edge TPU accelerator can boost it; we’ll mention that later.) Just remember: more complex tasks need a faster board. An Adafruit guide even warns that “TensorFlow vision recognition will not run on anything slower [than a Pi 4 or Pi 5]”[4]. The original Pi Zero (single-core 1 GHz) is slower still, but the newer Pi Zero 2 W with 4×1 GHz cores does better (see below), especially with simpler models. Choose your hardware to match the model you plan to run.
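To get a feel for what those frame rates mean on the floor, here is a small back-of-the-envelope sketch. It computes how far the car travels between two model decisions. The 0.5 m/s speed is an assumed figure for a small toy car, not a measurement; the frame rates are the ones quoted above.

```python
def distance_per_frame(speed_m_per_s: float, fps: float) -> float:
    """Distance the car covers between two consecutive model decisions."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return speed_m_per_s / fps


CAR_SPEED = 0.5  # m/s, an assumed speed for a small toy car

for board, fps in [("Pi 3B+", 1.0), ("Pi 4", 5.0)]:
    print(f"{board}: {distance_per_frame(CAR_SPEED, fps):.2f} m per decision")
```

At 1 fps the car would drive half a metre “blind” between decisions, which is one reason to keep the car slow when inference is slow.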

In short, TensorFlow Lite lets our RC car use AI locally. The car observes its surroundings through the camera. It processes these visuals with a model, like one trained to find lanes or objects. Then, it makes driving decisions immediately. We don’t send data to the cloud – it’s all on-board. This is key for real-time autonomy. Local processing also improves privacy and reduces latency, making it a preferred choice for embedded AI applications in robotics.

Hardware Setup

Before coding, gather the hardware. You’ll need a small computer, a way to see the environment, a power source, and drive components. Here’s the basic list:

- Raspberry Pi Zero 2 W (or similar Pi): This is the brain. The Zero 2 W is a tiny Raspberry Pi with a quad-core 1 GHz CPU and 512 MB RAM[5]. It runs Linux and can install Python and TensorFlow Lite. (The older Pi Zero was single-core and much slower; the Zero 2 W is much better.)

- Why this Pi? It’s very small and cheap yet can run TF Lite, especially for simpler models. You could also use a Pi 4 for more speed, but the Zero keeps the car small.

- Buy it on Amazon: Raspberry Pi Zero 2 W (Wireless) – Amazon.

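- Camera module: We need vision. A Raspberry Pi Camera Module V2 (8MP) or any USB webcam will do[6]. The camera plugs into the Pi’s CSI camera connector or a USB port. (The Pi Zero 2 W uses the smaller Zero-size camera connector, so it needs the matching ribbon cable.)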

- Purpose: Captures video frames that TensorFlow will process.

- Motor driver (H-bridge): The Pi’s GPIO pins can’t power motors directly (too much current). Use a motor driver board like the L298N (dual H-bridge) to control DC motors[7]. This driver can take low-power signals from the Pi and switch the high current from a battery to the motors.

- Buy it on Amazon: L298N Motor Driver Board – Amazon.

- Why needed? Raspberry Pi GPIO pins can only supply a few milliamps. The L298N lets you connect a separate battery (like 6–12V) for the motors while using Pi pins for direction (forward/back/stop).

- DC Motors and Wheels: Typically two drive motors (with wheels) for moving forward/back and turning. Often RC car chassis use two rear-wheel drive motors.

- Chassis/Frame: A small car frame to mount everything. You can use a toy RC car or a DIY kit. The electronics sit on top.

- Power supply: A battery pack. For example, a LiPo battery (~7.4V) can power the motors. The L298N board’s onboard 5V regulator can in principle feed the Pi, but it supplies only limited current, so a separate 5V battery or USB battery pack just for the Pi is the safer choice.

- Tip: Make sure you isolate power. If a motor drawing current causes a voltage drop, the Pi can reset. Sometimes builders use a separate battery for the Pi and another for the motors, or add capacitors/diodes for stability.

- Misc: Jumper wires, resistors (for safety), solderless breadboard or pins. Also, a microSD card (16GB or more) for the Pi OS.

Here’s a quick hardware setup checklist:

- Pi Zero 2 W setup: Install Raspberry Pi OS (Lightweight Linux). Enable camera in raspi-config. Connect to Wi-Fi.

- Attach the camera: Plug the Pi Camera into the Pi’s CSI slot. If using a USB cam, plug it into the Pi’s USB port.

- Mount the motor driver: Mount the L298N board near the Pi. Wire the Pi’s GPIO (logic) pins (e.g. 17, 22, etc.) to the L298N’s IN1–IN4 inputs to control the left and right motors.

- Wire the motors: Connect your motors to the L298N outputs (Motor A and B). If your L298N board has a 5V output, connect it to the Pi’s 5V pin only if it delivers a stable 5V and the board’s jumpers are set correctly. Otherwise, power the Pi from its own 5V supply.

- Power: Connect your battery pack’s positive terminal to the L298N’s motor supply input. The Pi itself needs a regulated 5V supply, so never connect a higher-voltage battery directly to it. Connect the battery ground to both the Pi’s and the L298N’s ground; the Pi and the motor driver must always share a common ground.

- Test motors: Before adding TensorFlow, write a simple Python script or use raspi-gpio to drive the motors forward and backward. Checking basic motor control through the GPIO pins verifies the hardware connections before you integrate any AI components.
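As a starting point for that motor test, here is a minimal sketch using RPi.GPIO. The pin numbers are assumptions (BCM numbering); change them to match your wiring. It also assumes the L298N’s enable jumpers are left in place, so the motors run at full speed whenever a direction is set. If RPi.GPIO can’t be imported (for example on a PC), the script only reports the pin levels it would set.

```python
import time

try:
    import RPi.GPIO as GPIO  # available on the Pi
except (ImportError, RuntimeError):
    GPIO = None  # allows a dry run on a PC without the library

# Assumed BCM pin numbers for IN1..IN4; match them to your own wiring.
LEFT_FWD, LEFT_REV, RIGHT_FWD, RIGHT_REV = 17, 22, 23, 24
PINS = (LEFT_FWD, LEFT_REV, RIGHT_FWD, RIGHT_REV)

# Pin levels (IN1, IN2, IN3, IN4) for each basic move.
MOVES = {
    "forward": (1, 0, 1, 0),
    "backward": (0, 1, 0, 1),
    "stop": (0, 0, 0, 0),
}


def drive(move: str) -> tuple:
    """Apply one move to the driver inputs and return the levels used."""
    levels = MOVES[move]
    if GPIO is not None:
        for pin, level in zip(PINS, levels):
            GPIO.output(pin, level)
    return levels


def motor_test(seconds: float = 1.0) -> None:
    """Drive forward, then backward, then stop and release the pins."""
    if GPIO is not None:
        GPIO.setmode(GPIO.BCM)
        GPIO.setup(list(PINS), GPIO.OUT, initial=GPIO.LOW)
    try:
        for move in ("forward", "backward"):
            print(move, drive(move))
            time.sleep(seconds)
    finally:
        drive("stop")
        if GPIO is not None:
            GPIO.cleanup()
```

With the wheels off the ground, call `motor_test()` on the Pi. If a wheel spins the wrong way, swap that motor’s two wires or its two pin numbers.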

By now you should have a “dumb” RC car: camera on front, Pi strapped on, motors wired through the L298N. You can control the motors with Python and even see camera images (e.g. raspistill or fswebcam). Next we’ll add the TensorFlow Lite “brain” part. This hardware foundation is critical for any AI-powered robotics project, ensuring reliable integration of computer vision and motor control.

Installing TensorFlow Lite and Dependencies

With the hardware ready, we need software. The Pi will run Python code using TensorFlow Lite. We must install:

- Raspberry Pi OS & Updates: Make sure the Pi OS is up to date:

    sudo apt update
    sudo apt upgrade

- Enable camera and SSH (optional): Use sudo raspi-config to enable the camera interface and SSH (so you can work headless). Reboot if prompted.

- Python and pip: The Pi Zero usually has Python 3 installed. Install pip if missing:

    sudo apt install python3-pip

- TensorFlow Lite runtime: We can install TensorFlow Lite via pip. The easiest way is to use the pre-built tflite-runtime package (a slim version). For example:

    pip3 install tflite-runtime

- Or install full TensorFlow if needed (though heavy): pip3 install tensorflow. For performance on Pi, tflite-runtime is preferred. Follow the official guide for Raspberry Pi if you need a specific version.

- OpenCV or Camera libraries: If you plan to process images easily, install OpenCV:

    pip3 install opencv-python

- (This is optional, but OpenCV’s cv2 helps read and manipulate frames from the camera.)

- GPIO library: Ensure you can control pins. The standard library is RPi.GPIO (usually pre-installed). Or use gpiozero for simplicity.

- Download a pre-trained model: We need a TensorFlow Lite model. You can train your own, but to start, use a ready-made model. For example, use a MobileNet SSD model for object detection or a custom model for line following. TensorFlow provides sample models (for instance, COCO SSD MobileNet V1). Download the .tflite file and any label file. Put them on the Pi (e.g. in /home/pi/models/).

- Note: If your goal is simple line following, you might use a model that detects a line or track, rather than general objects. The approach is similar: capture an image and let the model output what to do.

At this point, your Pi should have everything installed: TensorFlow Lite runtime, camera libraries, and a model file. You can test by running a short Python command to load the model, e.g.:

    import tflite_runtime.interpreter as tflite

    interpreter = tflite.Interpreter(model_path="model.tflite")
    interpreter.allocate_tensors()

If no errors occur, TFLite is set up. This installation process ensures compatibility and optimal performance for running machine learning models on resource-constrained devices like the Raspberry Pi in your AI RC car.
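Once the model loads, it helps to check what input it expects before feeding it camera frames. The sketch below is one way to do that. `describe_io` is a hypothetical helper name; it relies only on the interpreter’s `get_input_details()` and `get_output_details()` methods, which return a list of dictionaries describing each tensor.

```python
def describe_io(interpreter) -> dict:
    """Summarise the first input and all outputs of a loaded TFLite model."""
    inp = interpreter.get_input_details()[0]
    outs = interpreter.get_output_details()
    return {
        # e.g. (1, 300, 300, 3): batch, height, width, colour channels
        "input_shape": tuple(int(d) for d in inp["shape"]),
        # e.g. "uint8" for a quantized model, "float32" otherwise
        "input_dtype": getattr(inp["dtype"], "__name__", str(inp["dtype"])),
        "output_names": [o["name"] for o in outs],
    }


# On the Pi, after creating the interpreter and calling allocate_tensors():
# print(describe_io(interpreter))
```

The reported shape and data type tell you how to resize and convert each camera frame in the next section.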

Writing the Autonomy Code

Now comes the fun part: writing a Python program that uses TensorFlow to drive the car. The basic idea is:

- Capture Image: Take a frame from the camera.

- Preprocess: If needed, resize or normalize the image to match the model’s input requirements.

- Run Inference: Feed the image into the TensorFlow Lite interpreter to get results (e.g., detected objects or steering direction).

- Make Decision: Based on the model’s output, decide how to move. For example:

- If the model finds a red stop sign, issue a command to stop the motors.

- If using line following, see where the line is and turn wheels accordingly.

- If it sees an obstacle ahead, maybe turn left or stop.

- Control Motors: Use GPIO to set the motors’ direction and speed, for example by setting certain pins HIGH/LOW to go forward, backward, or turn. This basic control logic can later be expanded into more complex autonomous behaviors.
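The “Make Decision” step above can be sketched as a small pure function, which is easy to test without a camera or motors. Everything here is illustrative: the `Detection` type, the label names, the 0.5 confidence threshold, and the steer-away rule are assumptions, not part of any library.

```python
from typing import List, NamedTuple


class Detection(NamedTuple):
    label: str        # class name, e.g. "stop sign"
    score: float      # model confidence, 0.0 to 1.0
    x_center: float   # horizontal box centre, 0.0 (left) to 1.0 (right)


def decide(detections: List[Detection], min_score: float = 0.5) -> str:
    """Turn a list of detections into one driving command."""
    confident = [d for d in detections if d.score >= min_score]
    if any(d.label == "stop sign" for d in confident):
        return "stop"
    obstacles = [d for d in confident if d.label in ("person", "chair")]
    if obstacles:
        strongest = max(obstacles, key=lambda d: d.score)
        # Steer away from the side of the image the obstacle is on.
        return "left" if strongest.x_center >= 0.5 else "right"
    return "forward"
```

For example, `decide([Detection("chair", 0.8, 0.7)])` returns `"left"`, because the chair sits in the right half of the image. Keeping this logic separate from the GPIO code lets you try out rules on a PC first.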

A simple loop might look like:

- Initialize camera and TFLite interpreter.

- Loop:

- Grab a frame (frame = camera.capture()).

- Convert it to the format needed (resize to 300×300 if using MobileNet SSD, etc.).

- interpreter.set_tensor(…) to input the image.

- interpreter.invoke() to run the model.

- Read output (e.g., detected class and score).

- If an object of interest is detected (or a line found), decide: forward, turn, or stop.

- Send signals to motor driver:

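    import RPi.GPIO as GPIO

    # Example pins (BCM numbering); match them to your wiring
    IN1, IN2 = 17, 22
    GPIO.setmode(GPIO.BCM)
    GPIO.setup([IN1, IN2], GPIO.OUT)

    GPIO.output(IN1, True)   # set one motor forward
    GPIO.output(IN2, False)
    # similar for other motor…

- End loop (or exit on keypress).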
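The inference part of the loop (set the input, invoke, read the output) can be sketched as one function. `detect` is a hypothetical helper; `set_tensor`, `invoke`, and `get_tensor` are the interpreter’s own methods. The output order used here (boxes, classes, scores, count) is an assumption that matches some SSD MobileNet exports but not all, so check your model’s output details first.

```python
def detect(interpreter, image, min_score=0.5):
    """Run one inference and return [(class_id, score), ...] above min_score.

    `image` must already match the model input (for example a uint8 array
    of shape [1, 300, 300, 3] for a quantized SSD MobileNet).
    """
    input_index = interpreter.get_input_details()[0]["index"]
    outputs = interpreter.get_output_details()

    interpreter.set_tensor(input_index, image)
    interpreter.invoke()

    # Assumed output order: boxes, classes, scores, count. Verify it for
    # your own model, since exports can order these differently.
    classes = interpreter.get_tensor(outputs[1]["index"])[0]
    scores = interpreter.get_tensor(outputs[2]["index"])[0]
    return [(int(c), float(s)) for c, s in zip(classes, scores) if s >= min_score]
```

The class IDs can then be looked up in the label file that came with the model and passed on to your decision logic.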

It’s a good idea to first test each part separately. For example, write a small script to just control the motors (without TensorFlow) to ensure they move correctly. Then test capturing a camera image and displaying it. Then test running the model on one image and printing the result. After those parts work, combine them. Modular testing helps debug issues efficiently in AI robotics development.

The key is keeping the loop fast. Even a few frames per second is enough for a toy car. If inference is too slow, the car will be sluggish. (Later we’ll discuss speeding it up.)
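To find out how fast your loop actually runs, you can time a batch of iterations. This is a minimal sketch; `measure_fps` and its `step` argument are hypothetical names, where `step` stands for one full pass of your own loop (capture, inference, motor command).

```python
import time


def measure_fps(step, frames=20, clock=time.perf_counter):
    """Call `step()` `frames` times and return the average frames per second."""
    start = clock()
    for _ in range(frames):
        step()
    elapsed = clock() - start
    return frames / elapsed if elapsed > 0 else float("inf")
```

Averaging over several frames smooths out one-off delays, so the number is a fairer picture than timing a single frame.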

For detailed code examples, refer to online guides like Digi-Key’s tutorial on TensorFlow Lite object detection for Raspberry Pi[9]. It outlines installing the libraries and running a MobileNet SSD model. In simple terms, follow its steps to prepare the environment, then adapt the final step by replacing the printouts with motor commands.

Autonomy Testing

After writing the code, it’s time to test your autonomous RC car in the real world:

- Dry Run: Keep the car’s wheels off the ground so the motors spin freely. Run your code and check that the motors respond as expected to different inputs. You can simulate detections (e.g., temporarily hard-code a command) to test forward, backward, and turning moves.

- Controlled Environment: Set up a simple track or area. For obstacle avoidance, place a chair or box and see if the car stops or turns away. For line following, lay a black line (for example, dark tape) on a white surface and see if the car stays on it.

- Monitor Output: It helps to print log messages to the console. You can also run the code with a monitor/SSH terminal. This way, you see what the model thinks. For example, print “Obstacle detected” or “Going forward.”

- Safety First: Keep one hand near to hit a kill switch or unplug power if something goes wrong.
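The dry run and the log messages from the checklist above can be combined in a few lines. This is only a sketch: `dry_run` is a hypothetical helper that replays a hard-coded list of commands and logs each one, and the optional `drive` argument stands for your own motor function.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(message)s")
log = logging.getLogger("rc_car")


def dry_run(commands, drive=None):
    """Replay hard-coded commands, logging each one before acting on it."""
    executed = []
    for command in commands:
        log.info("Command: %s", command)
        if drive is not None:
            drive(command)  # on the car, pass your real motor function
        executed.append(command)
    return executed
```

Calling `dry_run(["forward", "left", "stop"])` with no `drive` function only prints timestamped log lines, which is a safe first check over SSH.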

You might notice issues while testing: the car is too fast, it turns incorrectly, or the model misdetects. Make small adjustments: slow down the motors with PWM, tweak the camera angle, or collect more training images if you made a custom model. Testing is iterative, and each round improves both the model’s accuracy and the overall system’s reliability in real-world conditions.

Remember, our goal is a robot that reacts intelligently. For example, one might program: “If the object “person” is detected ahead, stop and wait.” Or “If the line is off-center, turn motor speeds to re-center.” The TensorFlow model’s output (bounding boxes, classes, or steering angle) drives those actions.
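The “re-center on the line” rule can be sketched as a simple proportional controller. This assumes a two-motor differential drive and a line position already normalized to the range −1.0 (far left of the image) to 1.0 (far right). The `base_speed` and `gain` values are arbitrary starting points to tune on your own car.

```python
def _clamp(value: float, low: float = 0.0, high: float = 1.0) -> float:
    """Keep a value inside [low, high]."""
    return max(low, min(high, value))


def steer(line_offset: float, base_speed: float = 0.6, gain: float = 0.4):
    """Return (left, right) motor speeds in 0.0..1.0 for a line offset.

    A positive offset means the line is to the right, so the left motor
    speeds up and the right motor slows down to turn the car right.
    """
    offset = _clamp(line_offset, -1.0, 1.0)
    left = _clamp(base_speed + gain * offset)
    right = _clamp(base_speed - gain * offset)
    return left, right
```

The two speeds would then be turned into PWM duty cycles for the motor driver; a larger `gain` makes the car react more sharply but can also make it wobble.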

For reference, TensorFlow’s own docs highlight how object detection helps robots: it can track people or obstacles[10]. On RC cars, following a person or object is a popular project (often called a “follow-me robot”). Our testing stage is where you see the theory in action. This demonstrates the practical applications of TensorFlow Lite in enhancing robotic autonomy.

Advanced Tweaks and Tips

Once the basic system works, you can make it better and faster:

- Edge TPU (Coral USB Accelerator): If you want much faster inference, add a small hardware accelerator. One option is the Google Coral USB Edge TPU[11], a USB dongle that plugs into the Pi and runs TensorFlow Lite models very quickly, especially MobileNet-style models. In fact, it’s designed for TF models: “Models are built using TensorFlow. Fully supports MobileNet and Inception architectures…”[11]. Using a Coral USB accelerator can give you many more FPS than the Pi alone.

- Affiliate: Coral USB Accelerator (Edge TPU) – Amazon.

- Tip: If you use the Coral, you’ll need to use the special pycoral library to run models on the TPU. But it significantly speeds up vision tasks.

- Optimize Models: Use a quantized TFLite model (lower precision) for speed. The models from TensorFlow Lite’s model zoo often have a “quantized” version. Quantized models (8-bit) run faster on CPU. See TensorFlow Lite model optimization docs.

- Sensor Fusion: Besides the camera, add simple sensors: e.g., an ultrasonic distance sensor in front can detect very close obstacles. The camera might miss these obstacles or be too slow to see them. Infrared line sensors can help with line following as a backup. Combining camera and IR/ultrasonic data can improve safety.

- Improve Control: Add feedback loops, like reading actual wheel encoder counts to estimate speed or distance. (Many RC projects add encoders for better control.)

- Tweaks for Stability: Motor control can use PWM (pulse-width modulation) for variable speed instead of just on/off. You can generate PWM from the Pi GPIO or use a small microcontroller (like Arduino) for PWM.

- Training Your Own Model: In the long run, train a custom model for your specific track or objects. For example, you could use a tool like Roboflow. Alternatively, you could use TensorFlow Object Detection API. Use these tools to train on images of the obstacle you care about. Then convert it to TFLite.
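As an example of the sensor fusion tip above, here is a minimal sketch in which a close ultrasonic reading overrides whatever the camera model decided. `fuse` is a hypothetical helper, and the 15 cm threshold is an assumed value to tune for your car’s speed and stopping distance.

```python
from typing import Optional


def fuse(camera_command: str, distance_cm: Optional[float],
         stop_below_cm: float = 15.0) -> str:
    """Let a close ultrasonic reading override the camera's command."""
    if distance_cm is not None and distance_cm < stop_below_cm:
        return "stop"
    # No reading, or nothing close: trust the camera-based decision.
    return camera_command
```

Here `fuse("forward", 10.0)` returns `"stop"`, while `fuse("forward", 40.0)` keeps `"forward"`. Passing `None` for a missing reading falls back to the camera alone.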

Many hobbyists also mention frameworks like DonkeyCar or Google’s AIY Vision Kit for inspiration. (As one builder noted, their “rover” used a Coral Edge TPU and a software framework adapted from the Donkey Car project[12]. This shows even parents and kids build similar robots!) These advanced techniques can elevate your AI RC car from a basic prototype to a sophisticated autonomous vehicle.

Call to Action

You now have the essentials to build an autonomous RC car using TensorFlow! Here are some next steps and resources:

- Try Code Samples: Check out the TensorFlow Lite Tutorial for Raspberry Pi for example code. The Raspberry Pi Camera Module guide is helpful for setting up the camera.

- Experiment: Take your car outdoors or on a larger track. Try adding new behaviors (e.g. line following vs. object avoidance). The experience of testing will teach you a lot.

- Share & Learn: Look at Raspberry Pi forums or TensorFlow community discussions. Many makers have posted their RC car projects (search terms like “Raspberry Pi self-driving car TensorFlow”).

- Hardware: If you need parts, consider getting the Raspberry Pi Zero 2 W for the CPU, the Coral USB Accelerator for AI speedup, and an L298N Motor Controller. These components are great for beginners (affiliate links included).

Affiliate Disclosure: This post contains Amazon affiliate links. If you purchase through these links, we earn a small commission (at no extra cost to you). We only recommend products we like!

In summary, by combining a lightweight ML model (TensorFlow Lite) with a small computer (Raspberry Pi) and drive hardware, your toy car can gain a “brain” and drive itself. We covered the hardware setup, installing TensorFlow Lite, writing the control code, and testing the autonomous car. With these tips and links, you’re ready to integrate TensorFlow into your own RC car project and keep learning. Good luck and have fun building your self-driving RC car!

References

[1] TensorFlow | Google Open Source Projects https://opensource.google/projects/tensorflow

[2] Install TensorFlow 2 https://www.tensorflow.org/install

[3] [6] [8] [9] [10] How to Perform Object Detection with TensorFlow Lite on Raspberry Pi

[4] cdn-learn.adafruit.com https://cdn-learn.adafruit.com/downloads/pdf/running-tensorflow-lite-on-the-raspberry-pi-4.pdf

[5] Amazon.com: Raspberry Pi Zero 2 W (Wireless / Bluetooth) 2021 (RPi Zero 2W) : Electronics

[7] Interfacing L298N H-bridge motor driver with raspberry pi | by Sharad Rawat | Medium https://sharad-rawat.medium.com/interfacing-l298n-h-bridge-motor-driver-with-raspberry-pi-7fd5cb3fa8e3

[11] Amazon.com: Google Coral USB Edge TPU ML Accelerator coprocessor for Raspberry Pi and Other Embedded Single Board Computers : Electronics

[12] The Odyssey: Building an Autonomous “Follow-Me” Rover | by J.P. Leibundguth | Medium https://jleibund.medium.com/the-odyssey-building-an-autonomous-follow-me-rover-b9a14f6bf107